The study of human language is surprisingly contentious. There are two camps: the words-and-rules camp and the connectionist camp. (There are perhaps more, but as I understand it, these are the two serious contenders.) The words-and-rules camp begins with Noam Chomsky's work on language, in which he proposed the idea of generative grammar. There are many nuances and subtleties to it that I don't even begin to understand, but I'll try to explain it as best I understand and remember it.

The rough words-and-rules idea (sensu Pinker, whose version extends Chomskian theory) is that a baby's brain is not a blank slate (tabula rasa) when it comes to the syntax of language. What children are programmed to do as they learn language is to treat what they hear in structured ways. While acquiring language, they both abstract rules from what they hear and learn the meanings of words. Two types of memory are engaged in this: procedural memory, which handles the procedure of transforming words for use in a sentence, and lexical memory, which stores the semantic meaning of the words.

The other ideas are called connectionist, emergentist or structural. (I'm less familiar with them, so forgive me if I give them a bit of short shrift.) They suggest that children's brains simply build statistical models of language, and that this is how they learn it. Rather than abstracting rules, children learn the statistical regularities in language. These approaches are often accompanied by neural network models, which researchers train on large amounts of text to see what language the models are capable of producing. I personally find them a bit unsatisfying, but they keep the debates over language fresh and engaging.

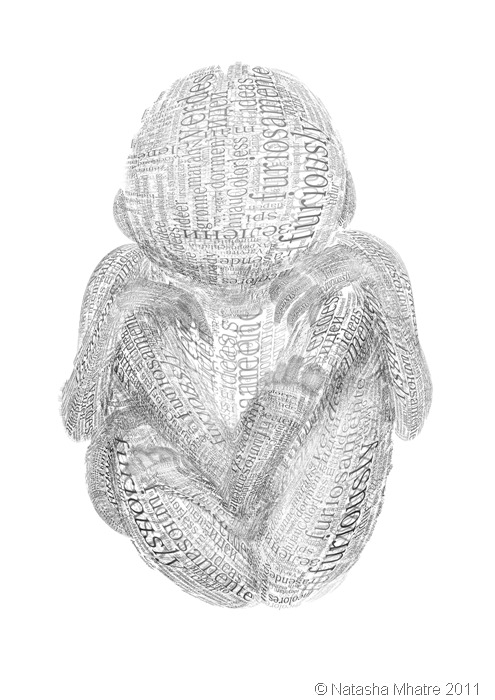

Over the years much argument and fighting has ensued. In one such moment, while ridiculing the probabilistic model of language, Chomsky coined the phrase 'Colourless green ideas sleep furiously'. It's a phrase that could come out of a statistical or purely structural model: its syntax is perfect, and yet it means nothing.

The language baby word beast (and we are beasts, even if we would like to separate ourselves, often on the basis of language itself) is made of that phrase in as many languages as the Wordle site can encode. The baby is based on one of my favourite drawings, a fetus seen from a truly unusual angle by a master of many things, not least art and science. The baby is an oblique look at all the argument that lies dormant within our capacity for language.

Hat tip to Daniel Robert, who formed the nucleus of this word beast idea.

2 comments:

Hi Natasha,

I saw this TED video by Deb Roy, a faculty member at the MIT Media Lab.

The video describes his research on how his own infant picks up words in context, using audio and video data mining.

http://www.ted.com/talks/deb_roy_the_birth_of_a_word.html

I know about that one. It's on my list of things I need to see soon :) How're you doing these days? How is your mailing-list teaching exercise going?
